So long, selectors

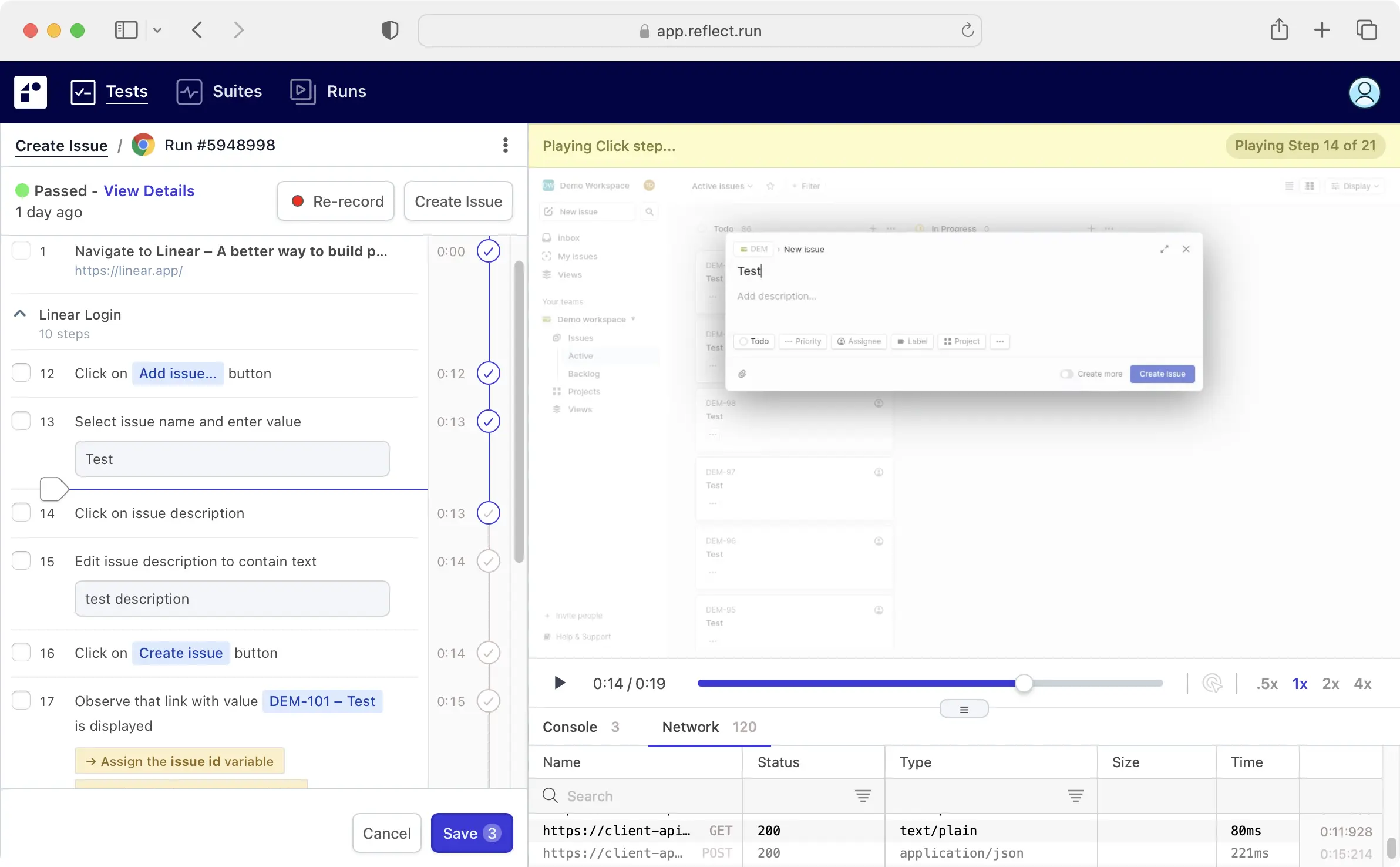

Reflect uses SmartBear HaloAI, a generative AI technology, to instantly turn your plain-English test steps into automated actions – no coding required.

Unlike tools that depend on fragile selectors or locators, Reflect adapts automatically as your app evolves. That means your tests keep working – even when the UI changes.