How to create a leading Quality Assurance process

Software companies establish a QA process to ensure that their applications keep working. During the development cycle, as applications evolve and gain new functionality, that functionality needs to be tested for quality and correctness. However, existing functionality can also break as a result of changes, both internal and external. So existing functionality needs to be tested as well, to ensure that it hasn't regressed. Your QA process lays out how your company meets both of these requirements.

QA processes vary widely between companies, and there is no one-size-fits-all methodology. In this guide, we'll discuss how QA works, the steps and types of testing involved, and how to implement a best-in-class QA process.

What’s the purpose of a QA testing process?

Companies use QA testing to ensure their software performs according to its specifications. QA relies on manual and automated tests to measure performance, find bugs, and support quality control (QC). QA processes range from ad-hoc, casual practices to formal procedures with checklists and assigned tasks.

How a QA team tests your product

The exact steps in a QA process vary depending on your development methodology, for example whether you use Scrum or another Agile approach. At a high level, here's an example of how QA teams handle testing and reporting regardless of the software development process.

1. Requirements analysis

Before testing can begin, a QA team needs to determine what it's going to test. The team creates a requirements document that lists the platforms, modules, and instructions for what will be tested.

2. Creating a test plan

The test plan describes in detail how your team will perform its tests, including which tests will be run manually and which will be automated.
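To make this concrete, here is a minimal sketch of a test plan kept as plain data, recording what each test covers and whether a person or automation will run it. The `Mode`, `PlannedTest`, and `TestPlan` names and the sample tests are hypothetical, chosen for illustration rather than taken from any particular tool.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    MANUAL = "manual"
    AUTOMATED = "automated"


@dataclass
class PlannedTest:
    name: str
    module: str  # the part of the product this test covers
    mode: Mode   # whether a person or automation will run the test


@dataclass
class TestPlan:
    tests: list[PlannedTest] = field(default_factory=list)

    def by_mode(self, mode: Mode) -> list[str]:
        """Return the names of planned tests that will run in the given mode."""
        return [t.name for t in self.tests if t.mode is mode]

    def modules_covered(self) -> set[str]:
        """Return every module that at least one planned test exercises."""
        return {t.module for t in self.tests}


# Hypothetical plan for illustration.
plan = TestPlan([
    PlannedTest("Sign up with email", "accounts", Mode.AUTOMATED),
    PlannedTest("Checkout with saved card", "billing", Mode.AUTOMATED),
    PlannedTest("Explore the new dashboard layout", "dashboard", Mode.MANUAL),
])

print("Automated:", plan.by_mode(Mode.AUTOMATED))
print("Manual:", plan.by_mode(Mode.MANUAL))
print("Modules covered:", sorted(plan.modules_covered()))
```

Keeping the plan as data makes it easy to check, before testing starts, that every module from the requirements document is covered by at least one test.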

3. Designing test cases

Test cases are predetermined series of steps that your QA testers follow during the QA process. They range from simple checks of website interactions to more advanced tests focused on integration use cases.
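As an illustration, a test case can be modeled as an ordered list of steps, each pairing an action with the result the tester should expect. This is a minimal sketch; the `Step` and `TestCase` names and the login example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str    # what the tester does
    expected: str  # what the tester should observe afterwards


@dataclass(frozen=True)
class TestCase:
    title: str
    steps: tuple[Step, ...]

    def as_checklist(self) -> str:
        """Render the steps as a numbered checklist a tester can follow."""
        lines = [self.title]
        for i, step in enumerate(self.steps, start=1):
            lines.append(f"{i}. {step.action} -> expect: {step.expected}")
        return "\n".join(lines)


# Hypothetical test case for a login flow.
login = TestCase(
    "Log in with valid credentials",
    (
        Step("Open the login page", "Email and password fields are visible"),
        Step("Submit a valid email and password", "Dashboard loads"),
        Step("Click the avatar menu", "Logged-in user's name is shown"),
    ),
)
print(login.as_checklist())
```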

4. Running test cases

Once testing starts, your team logs any bugs or performance issues it finds. As testers work through each test case, they flag anything that's broken so your engineers can focus on fixing it.
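For automated test cases, "run everything and log what breaks" can be sketched as a loop that records each failure as a bug report instead of stopping at the first one. The `run_tests` helper, the `BugReport` record, and the deliberately buggy `apply_discount` function are all hypothetical stand-ins for real tests and application code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class BugReport:
    test_name: str
    message: str


def run_tests(tests: dict[str, Callable[[], None]]) -> list[BugReport]:
    """Run every test case and log each failure as a bug report instead of stopping."""
    bugs = []
    for name, test in tests.items():
        try:
            test()
        except Exception as exc:  # assertion failures and unexpected crashes alike
            bugs.append(BugReport(name, f"{type(exc).__name__}: {exc}"))
    return bugs


def apply_discount(price: float, percent: float) -> float:
    # Hypothetical application code with a deliberate bug for the run to find:
    # it subtracts the percentage as a flat amount instead of a percentage.
    return price - percent


def check_zero_discount():
    assert apply_discount(50, 0) == 50, "price changed with a 0% discount"


def check_ten_percent_discount():
    result = apply_discount(200, 10)
    assert result == 180, f"expected 180, got {result}"


bugs = run_tests({
    "zero discount": check_zero_discount,
    "ten percent discount": check_ten_percent_discount,
})
for bug in bugs:
    print(f"BUG in {bug.test_name!r}: {bug.message}")
```

Only the ten-percent check fails here, and its report carries enough detail ("expected 180, got 190") for an engineer to start investigating.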

5. Retesting fixed bugs and running regression testing

Once your engineers have fixed the issues found by your QA team, testers retest those bugs to confirm the fixes. They then run regression tests to ensure the recent changes haven't adversely affected existing features in your software.
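One way to picture retesting and regression testing together is to compare each test's result from before a round of fixes with its result afterwards. This minimal sketch assumes results are stored as simple pass/fail maps; the `compare_runs` name and the sample data are hypothetical.

```python
def compare_runs(before: dict[str, bool], after: dict[str, bool]) -> dict[str, list[str]]:
    """Classify tests by comparing results from before and after a round of fixes.

    Both dicts map a test name to True (passed) or False (failed).
    """
    report: dict[str, list[str]] = {"fixed": [], "regressed": [], "failing": []}
    for name, passed_now in after.items():
        passed_before = before.get(name)  # None if the test is new
        if passed_before is False and passed_now:
            report["fixed"].append(name)      # retest confirms the bug fix
        elif passed_before is True and not passed_now:
            report["regressed"].append(name)  # something that used to work broke
        elif not passed_now:
            report["failing"].append(name)    # still broken, or a new test that fails
    return report


before = {"login": True, "checkout": False, "search": True}
after = {"login": True, "checkout": True, "search": False}
print(compare_runs(before, after))
```

Here the checkout fix is confirmed, but search, which passed before the fix, now fails: exactly the kind of side effect regression testing is meant to catch.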

6. Reporting

Once the QA team is done with their tests, they’ll create a report showing the bug fixes, outstanding issues to be resolved, and the results of regression tests.
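A report with those three parts can be generated from data the team already has. The sketch below assumes bug lists are plain strings and regression results are a name-to-pass/fail map; `build_report` and the sample entries are hypothetical.

```python
def build_report(fixed: list[str], outstanding: list[str], regression: dict[str, bool]) -> str:
    """Summarize bug fixes, outstanding issues, and regression results as plain text."""
    passed = sum(regression.values())  # True counts as 1, False as 0
    lines = [
        f"Bugs fixed: {len(fixed)}",
        *(f"  - {bug}" for bug in fixed),
        f"Outstanding issues: {len(outstanding)}",
        *(f"  - {bug}" for bug in outstanding),
        f"Regression tests: {passed}/{len(regression)} passed",
    ]
    failed = [name for name, ok in regression.items() if not ok]
    if failed:
        lines.append("  Failed: " + ", ".join(failed))
    return "\n".join(lines)


report = build_report(
    fixed=["Checkout total was wrong"],
    outstanding=["Search is slow on mobile"],
    regression={"login": True, "checkout": True, "search": False},
)
print(report)
```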

Types of testing to perform

A QA team can perform dozens of kinds of testing, either manually or through automation. Here's how manual and automated testing work:

Manual QA testing

Manual testing means testing features and functions from an end user's perspective. Unlike automated testing, it is carried out by people. Typically, a tester follows a set of predetermined test cases to keep testing consistent, then reports on how the software performs.

The types of manual tests include:

- Ad-hoc, or exploratory testing

- Acceptance testing

- API testing

- Load testing

- Localization testing

- Compatibility testing

- End-to-end testing

- GUI testing

- Functional testing

- Visual or UI testing

Automated QA testing

Automated testing is the opposite of manual testing: instead of relying on a person to run tests, you use software to automate the testing process. Tests are written and then run to measure performance and find potential issues. Some automated QA testing platforms integrate with continuous integration (CI) platforms, which makes automated tests easier to run. Automation can perform nearly all of the manual test types listed above.
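For a sense of what "tests are written and then run" looks like in code, here is a minimal sketch using Python's built-in `unittest` module. The `format_price` function is a hypothetical piece of application code, not something from this article; the point is that once written, these checks can run unattended as often as you like.

```python
import unittest


def format_price(cents: int) -> str:
    """Hypothetical application function under test: 1205 -> "$12.05"."""
    return f"${cents // 100}.{cents % 100:02d}"


class FormatPriceTests(unittest.TestCase):
    def test_whole_dollars(self):
        self.assertEqual(format_price(500), "$5.00")

    def test_cents_are_zero_padded(self):
        self.assertEqual(format_price(1205), "$12.05")

    def test_under_one_dollar(self):
        self.assertEqual(format_price(99), "$0.99")


# Run the suite programmatically. In CI you would more typically run
# `python -m unittest`, which exits with a non-zero status when any test
# fails, so the CI job (and the build) is marked as failed.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(FormatPriceTests)
result = unittest.TextTestRunner(verbosity=2).run(suite)
print("All tests passed" if result.wasSuccessful() else "Some tests failed")
```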

An example QA process

Reflect is flexible enough to support all sorts of QA approaches. Whether you're a one-person operation that just needs to offload some of your testing or you have a full team of dedicated test engineers, Reflect is a tool that anyone can wield. In the example below, we present a simple QA process for a small software company.

Let's imagine a software company where a handful of developers build the product. Another person spends part of their time testing the application, especially around the times when the development team deploys new versions. In other words, there are several full-time developers and one part-time QA engineer.

The developers build the product throughout the week and deploy their changes to a staging environment whenever they complete a functional change or a new feature. The staging environment mimics the configuration of the production environment your customers use, but it uses different account data (i.e., fake or test account data) and usually runs on less infrastructure so that it's cheaper.
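The relationship between the two environments can be sketched as a configuration that staging copies from production, overriding only the data and the infrastructure size. The `Environment` fields, URLs, feature flag, and server counts below are all hypothetical values for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Environment:
    name: str
    base_url: str
    feature_flags: frozenset[str]  # application configuration shared by both environments
    account_data: str              # "customer" or "test"
    app_servers: int               # how much infrastructure backs the environment


production = Environment(
    name="production",
    base_url="https://app.example.com",  # hypothetical URL
    feature_flags=frozenset({"new_checkout"}),
    account_data="customer",
    app_servers=8,
)

# Staging copies production's configuration but swaps in test accounts
# and fewer servers to keep costs down.
staging = replace(
    production,
    name="staging",
    base_url="https://staging.example.com",  # hypothetical URL
    account_data="test",
    app_servers=2,
)
print(staging)
```

Deriving staging from production, rather than maintaining two independent configurations, makes it harder for the two to drift apart unnoticed.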

The staging environment allows everyone to test new application changes end to end using realistic, non-customer data without affecting the production environment. In general, you'll want to test both your staging and production environments, though each one usually calls for a different focus.

Testing in staging focuses slightly more on new features and the parts of the application that are changing, while testing in production covers all functionality as a whole, with an eye toward ensuring that critical functions are always working. Now, let's establish the testing procedures for this hypothetical company.

How Reflect makes QA testing easier

The most important thing is to actually establish the QA process. Everyone should know, and ideally agree on, who will test the different parts of your application. In our scenario, let's say that developers are required to test their changes in staging: they merge and deploy their changes to the staging environment and then create tests for those changes in Reflect.

Developers can run these tests on demand, but most commonly they'll set up a schedule to run them every day against the staging environment for as long as they're working on the new feature. This way, the developers can focus on building the product, confident that each new piece of the feature doesn't break earlier parts.
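Reflect manages schedules for you, so the following is not how Reflect works internally. Purely as a tool-agnostic illustration of "every day for as long as the feature is in development", this sketch lists the run times a daily schedule produces over a development window. The `daily_runs` helper, the dates, and the 06:00 run time are hypothetical.

```python
from datetime import date, datetime, time, timedelta


def daily_runs(start: date, end: date, at: time) -> list[datetime]:
    """Every scheduled run of a daily test schedule between start and end (inclusive)."""
    runs = []
    day = start
    while day <= end:
        runs.append(datetime.combine(day, at))
        day += timedelta(days=1)
    return runs


# Hypothetical: the feature is under development from March 4 to March 8,
# and its tests run against staging at 06:00 each day.
runs = daily_runs(date(2024, 3, 4), date(2024, 3, 8), time(6, 0))
for run in runs:
    print(run.isoformat(), "-> run feature tests against staging")
```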

Eventually, the new feature is released to all customers and runs in production. At this point, the developers can update those tests to run against the production environment rather than staging. In our hypothetical scenario, this is also when the developers hand ownership of the tests they wrote to the part-time tester. The part-time tester can then change the schedule on which the tests run, or organize them into new logical groups using Reflect's folders.
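The handoff can be thought of as updating three pieces of metadata on each test: its target environment, its owner, and its folder. The sketch below models that with a hypothetical `TestRecord` type and `hand_off_to_production` helper; it is an illustration of the idea, not Reflect's data model.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TestRecord:
    name: str
    environment: str  # "staging" or "production"
    owner: str
    folder: str


def hand_off_to_production(test: TestRecord, new_owner: str, folder: str) -> TestRecord:
    """Point a test at production and give it a new owner and folder."""
    return replace(test, environment="production", owner=new_owner, folder=folder)


# Hypothetical tests written by two developers during feature work.
feature_tests = [
    TestRecord("Export report as CSV", "staging", "dev-alice", "reports-feature"),
    TestRecord("Schedule weekly report", "staging", "dev-bob", "reports-feature"),
]
handed_off = [hand_off_to_production(t, "qa-sam", "reports") for t in feature_tests]
for t in handed_off:
    print(t)
```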

In addition to managing tests against the production environment, the part-time tester will also periodically perform exploratory testing against the staging and production environments. As popular new use cases emerge over time, the tester can create tests to verify these experiences are always working. In our scenario, the tester often moves tests from an “experimental” folder into a “nightly regression” folder as the tests prove useful.

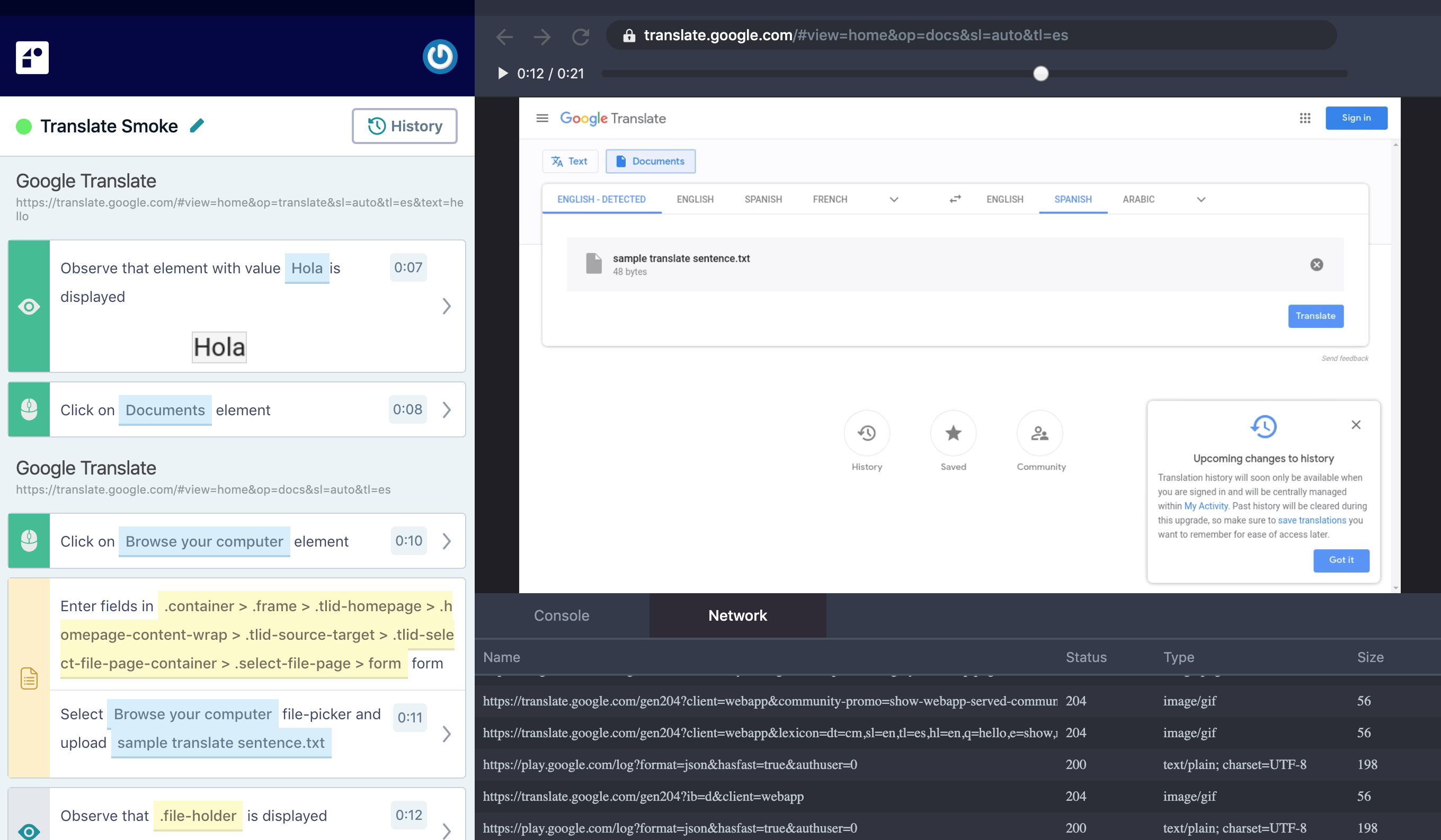
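What counts as a test that has "proved useful" is up to your team. Assuming, purely for illustration, that the criterion is stability (a test has passed its last five runs and can be trusted in a nightly suite), the move from "experimental" to "nightly regression" could be sketched like this. The `ready_for_nightly` and `promote` helpers, the threshold, and the run histories are hypothetical.

```python
def ready_for_nightly(history: list[bool], required_passes: int = 5) -> bool:
    """True if the most recent `required_passes` runs all passed.

    `history` lists run outcomes oldest first (True = passed).
    """
    recent = history[-required_passes:]
    return len(recent) == required_passes and all(recent)


def promote(folders: dict[str, list[str]], histories: dict[str, list[bool]]) -> None:
    """Move stable tests from the "experimental" folder into "nightly regression"."""
    for name in list(folders["experimental"]):  # iterate over a copy while mutating
        if ready_for_nightly(histories.get(name, [])):
            folders["experimental"].remove(name)
            folders["nightly regression"].append(name)


folders = {"experimental": ["bulk invite", "dark mode toggle"], "nightly regression": ["login"]}
histories = {
    "bulk invite": [True] * 6,
    "dark mode toggle": [True, False, True, True, True],  # one recent failure
}
promote(folders, histories)
print(folders)
```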

Respond to testing failures

Inevitably, some of your tests will fail over time. Whether a failure comes from an intentional application change or an unexpected side effect of, say, adopting a new layout framework, you'll receive an email or Slack notification about the failing test. A primary goal of Reflect is to make it easy to respond to such failures. Reflect's test result view includes all of the information you need to confirm the issue, reproduce it, and identify its root cause.

The test result view displays the steps to reproduce the issue, a video of the execution and the network logs for debugging.

In fact, the test result view is an efficient way for the tester and developer to communicate about a website bug. The tester first confirms that the error is legitimate by reviewing the test steps and the video of the test execution in Reflect. The tester then opens a new ticket in the company's issue tracking system, pastes in the URL of the test result view, and notifies the developers. (Developers can also be notified directly from Reflect if you prefer.) Finally, the developers review the failure and work on a fix, optionally debugging the issue with the console and network logs in Reflect.
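The ticket itself mostly needs to point developers at the failing run. Here is a minimal sketch of drafting one; the `FailedResult` fields, `draft_ticket` helper, ticket layout, and URL are hypothetical, and this does not use Reflect's or any issue tracker's API.

```python
from dataclasses import dataclass


@dataclass
class FailedResult:
    test_name: str
    failed_step: str
    result_url: str  # link to the test result view (steps, video, logs)


def draft_ticket(result: FailedResult, assignee: str) -> dict[str, str]:
    """Draft an issue-tracker ticket that points developers at the failing run."""
    return {
        "title": f"[Test failure] {result.test_name} fails at: {result.failed_step}",
        "assignee": assignee,
        "body": (
            "Confirmed as a real failure after reviewing the test steps and video.\n"
            f"Test result (steps, video, console and network logs): {result.result_url}"
        ),
    }


failure = FailedResult(
    "Checkout with saved card",
    "Click 'Place order'",
    "https://tests.example.com/results/1234",  # hypothetical URL
)
ticket = draft_ticket(failure, assignee="dev-alice")
print(ticket["title"])
print(ticket["body"])
```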

If the failure reflects an intentional change to the application, Reflect supports several ways to edit your tests. For this hypothetical example, let's consider the simplest case: the visual appearance of some component in your application has changed. If you previously created a visual assertion for that component, your test will fail when you deploy changes to its appearance. Accepting the new visual state of the element is as easy as clicking Accept Changes above the image in the test step detail view.
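Conceptually, a visual assertion compares a new screenshot against an approved baseline, and accepting changes replaces that baseline. The sketch below is a simplified, hypothetical model of that idea, not Reflect's implementation: screenshots are flat lists of grayscale pixel values, and the check fails when more than an assumed 1% of pixels differ.

```python
class VisualAssertion:
    """Compare a component's screenshot against an approved baseline.

    Screenshots are modeled as flat lists of grayscale pixel values (0-255),
    a simplification for illustration.
    """

    def __init__(self, baseline: list[int], max_diff_ratio: float = 0.01):
        self.baseline = list(baseline)
        self.max_diff_ratio = max_diff_ratio  # fraction of pixels allowed to differ

    def diff_ratio(self, screenshot: list[int]) -> float:
        """Fraction of pixels that differ from the baseline."""
        if len(screenshot) != len(self.baseline):
            return 1.0  # different dimensions: treat as completely changed
        if not self.baseline:
            return 0.0  # two empty images are identical
        changed = sum(1 for a, b in zip(self.baseline, screenshot) if a != b)
        return changed / len(self.baseline)

    def check(self, screenshot: list[int]) -> bool:
        return self.diff_ratio(screenshot) <= self.max_diff_ratio

    def accept_changes(self, screenshot: list[int]) -> None:
        """Approve the new appearance: it becomes the baseline for future runs."""
        self.baseline = list(screenshot)


button = VisualAssertion(baseline=[255] * 100)
restyled = [255] * 50 + [0] * 50  # half the pixels changed after a redesign
print(button.check(restyled))     # False: the test fails
button.accept_changes(restyled)
print(button.check(restyled))     # True: the new state is now the baseline
```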

Conclusion

QA processes take many shapes and forms at software companies, but what matters is having a process your team can rely on and that facilitates communication. Reflect serves both the individual tester and a team of developers by unifying their testing efforts through a common interface: the browser. This is also the interface your users interact with when using your application. Communication is key to your QA process, and Reflect makes sure everyone speaks the same language.