Lightning Web Components

“The Lightning Web Components UI framework uses core Web Components standards and provides only what’s necessary to perform well in browsers. Because it’s built on code that runs natively in browsers, the framework is lightweight and delivers exceptional performance. Most of the code you write is standard JavaScript and HTML.” (via lwc.dev)

Lightning Web Components (LWC) is a framework developed by Salesforce to build web applications. It is meant to replace their older “Aura” framework, and it justifies its existence in a number of ways. All of these justifications stem from the thinking that “using the platform” delivers better performance, greater portability, and more predictability. The pitch goes: if you’ve built software that runs in modern browsers, you already know how LWC works! They’re simply providing some thin wrappers that help solve common problems faced when building web apps, along with tooling that prioritizes writing code that uses the Salesforce platform. From a Salesforce developer’s perspective, this may appear to be the case. In general, code that’s written to conform to the LWC standard looks just like code you’d write without any framework at all and delivers on functionality the browser supports out of the box (scoped styles, reusable “components”, templates, slots, etc.).

Shadow DOM

The fundamental building block in LWC is the component, which is meant to be a thin convenience wrapper around the Web Components stack. These specs bring capabilities natively to the browser that previously required a significant amount of complicated and non-performant JavaScript code. Most of the interesting bits boil down to the use of a “Shadow DOM”, which is meant to behave like an isolated black box of reusable functionality in the DOM.

But LWC has a well-documented secret. The “Shadow DOM” capabilities that it purports to leverage are 100% faked 100% of the time. Because these browser APIs are not supported in every browser (though all modern browsers support them), LWC decided to introduce a shimmed implementation. This seems acceptable: a small number of users running older browsers pay a performance price so that Salesforce developers can write code once that runs anywhere. The problem is that by default the shimmed implementation of Shadow DOM is used everywhere, even in the majority of browsers that support it out of the box. Thus, all users end up paying the price.
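For contrast, a conditional approach would install the shim only when native support is missing. Here is a minimal sketch of that check. `needsShadowShim` and `ElementProtoLike` are hypothetical names used for illustration and are not part of LWC; `attachShadow` is the standard method browsers expose for creating a shadow root.

```typescript
// Minimal shape of the object we probe; in a browser this would be Element.prototype.
interface ElementProtoLike {
  attachShadow?: unknown;
}

// True when the environment lacks native Shadow DOM and a shim is actually needed.
function needsShadowShim(elementProto: ElementProtoLike): boolean {
  return typeof elementProto.attachShadow !== "function";
}

// Hypothetical environments, for illustration only.
const modernBrowser: ElementProtoLike = { attachShadow: () => ({}) };
const legacyBrowser: ElementProtoLike = {};

const shimOnModern = needsShadowShim(modernBrowser); // false: use the native implementation
const shimOnLegacy = needsShadowShim(legacyBrowser); // true: fall back to the shim
```

With a check like this, only users of older browsers would pay for the shim.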

All of this is to say that Salesforce has contradictory goals with this framework:

- Leverage modern browser technologies to solve problems and

- Support old browsers that do not themselves support those technologies

To satisfy both, they have to make a fundamental concession in the design. The way that concession is made today is to let developers write code that looks like it utilizes modern browser features, while unconditionally polyfilling nearly every browser API. This has (at least) two objective and measurable consequences:

- The framework is not “fast” because the framework is a ~270kB runtime library that adds overhead to nearly every important getter, setter, and method call

  - Here are some unscientific findings using a test bench script shared below wherein I compare the “native” API performance to those that run through the shimmed layer:

    - childNodes: 1.18x slower
    - textContent: 1.41x slower
    - querySelector: 3.11x slower

- The framework introduces complexity and overhead purely to simulate conditions that would be experienced if you were using the platform

It’s all downside. LWC maintainers lose because they have to constantly keep up with an evolving and complex spec. End users lose because they pay for the degraded performance in CPU cycles. Only developers kind of get what they want, which is the feeling of using modern web technologies.

Here’s a challenge: predict what will happen when the following actions are taken:

- In Chrome, open DevTools and find an element in the inspector somewhere on a Salesforce page - maybe something in a Datatable

- Right click on that element and select Copy > Copy JS Path

- Paste the copied value into the console

If you predicted that “a single document.querySelector() statement would be printed, with no chained .shadowRoot calls in it, and that, when executed, returns null” you are correct! If not, let’s work to understand why this fails.

Shimmed Methods

Reflect is an automated testing platform, so why does the above matter to us? When Reflect is recording or executing actions, it’s nice to be able to assume that the long-dead practice of overriding browser APIs is over. We can’t take that for granted: some sites still load MooTools, and some applications really need the String object’s prototype to include spongebobify(). But overall, the browser’s APIs are generally reliable.

That falls apart completely when interacting with a Salesforce app. Nearly every answer to nearly every introspective API question (“what elements does this Node contain”, “does this selector uniquely identify some node”, “what is the innerText of this node”, etc.) comes with a caveat: it’s a lie.

Let’s use the querySelector example from above to illustrate the problem more concretely.

Note: The example here will translate into nearly any Salesforce app, but will not be identical. Things like the specific element we’re targeting and the selectors that Chrome generates are going to vary based on details specific to the app.

Let’s imagine that during a recording, we detected that a user clicked on a “First Name” cell in a datatable. The first thing we need to do is generate a selector for that element. Reflect has proprietary methods for doing this internally, but we can take a shortcut and use Chrome’s (verbose) auto-generated selector to start:

|

|

I’ve simplified it manually to a form that I know also works and is easier to read:

|

|

We’ve hit our first issue. If you execute the code above, you’ll get back null. If you manually traverse every node from the one we’re targeting up to <html> you’d produce the same results. So why is this failing? We can discover why by inspecting the source code for LWC. I’ll save you some time.

|

|

Thwarted! LWC overrides querySelector on Document.prototype and “breaks” it. That’s okay, we have more than one tool. Maybe we can use querySelectorAll, or maybe we can find a different way to generate the selector and somehow use getElementsByClassName. Maybe we can somehow get a reference to the body HTMLBodyElement and use that as the object on which we call querySelector.
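To make the effect of such an override concrete, here is a minimal sketch of how replacing a prototype method can make a lookup return null even though the node exists. This is not LWC’s actual code: `FakeNode`, `find`, `depthFirst`, and `ownedByComponent` are hypothetical stand-ins so the example runs without a browser.

```typescript
// Hypothetical stand-in for a DOM node.
class FakeNode {
  constructor(
    public readonly tag: string,
    public readonly kids: FakeNode[] = [],
    public readonly ownedByComponent = false, // pretend this node sits behind a faked shadow boundary
  ) {}

  // "Native" behavior: return the first descendant with a matching tag.
  find(tag: string): FakeNode | null {
    return depthFirst(this, tag);
  }
}

// Plain depth-first search that never goes through the (patchable) method.
function depthFirst(root: FakeNode, tag: string): FakeNode | null {
  for (const kid of root.kids) {
    if (kid.tag === tag) return kid;
    const hit = depthFirst(kid, tag);
    if (hit !== null) return hit;
  }
  return null;
}

// Keep a private reference to the native behavior, then replace the public method.
const nativeFind = FakeNode.prototype.find;
FakeNode.prototype.find = function (this: FakeNode, tag: string): FakeNode | null {
  const hit = nativeFind.call(this, tag);
  // Hide anything that belongs to a component, as if a shadow boundary were in the way.
  return hit !== null && hit.ownedByComponent ? null : hit;
};

const cell = new FakeNode("td", [], true);
const page = new FakeNode("html", [new FakeNode("body", [cell])]);

const viaPatched = page.find("td");            // null: the override filters the cell out
const viaNative = nativeFind.call(page, "td"); // the cell: it was in the tree all along
```

The caller of `find` has no way to tell that the result was filtered, which is the situation the Copy JS Path experiment exposes.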

We Go First

At Reflect, our browser instrumentation logic always gets to run first (we control the VM, the browser, etc.). This gives us an advantage: we can take any action we want “when the page loads”. So what recourse do we have? When the page loads, the browser APIs are all pure. They cannot have been mutated yet. Ideally we could preserve the original version of querySelector so that we can execute it in the future without also clobbering Salesforce’s override. Let’s give that a shot…

|

|
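A minimal sketch of this idea, assuming a stand-in class so it runs outside a browser: `FakeDocument` is hypothetical, and in a real page the target would be Document.prototype. The stored name `myStoredQuerySelector` follows the convention used later in this post.

```typescript
// Hypothetical stand-in for Document.
class FakeDocument {
  constructor(private readonly selectors: string[]) {}

  querySelector(selector: string): string | null {
    return this.selectors.indexOf(selector) !== -1 ? `<${selector}>` : null;
  }
}

// Tell TypeScript about the extra field we are about to add.
interface FakeDocument {
  myStoredQuerySelector(selector: string): string | null;
}

// Step 1 (instrumentation, runs first): stash the pristine method under a new name.
FakeDocument.prototype.myStoredQuerySelector = FakeDocument.prototype.querySelector;

// Step 2 (page script, runs later): the framework replaces the public method.
FakeDocument.prototype.querySelector = function (): string | null {
  return null;
};

const doc = new FakeDocument(["td.first-name"]);
const patchedResult = doc.querySelector("td.first-name");        // null
const storedResult = doc.myStoredQuerySelector("td.first-name"); // "<td.first-name>"
```

Because the copy is taken before the page’s scripts run, the override in step 2 never touches it.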

Now if we load a page in which our instrumentation is running we can try:

|

|

Success! This works and allows us to query the real DOM without LWC running interference. We get back the element we expected to.

Based on our approach above, we’d probably want to do the same for other objects that implement the querySelector method, and any other methods that LWC overrides.

|

|

Shimmed Getters

Now that we can reliably query the DOM with <obj>.myStoredQuerySelector(), our problems are solved! You can imagine that we might want to query that node for more specific information. For example, if we wanted to get some information about child elements, we could use the .children getter:

|

|

That’s odd. The information above doesn’t look like a normal HTMLCollection and (in this case) the values for each index (key) don’t map to what I’m seeing in the browser. But maybe I’m misremembering how HTMLCollections work. If I call toString() on the value above, I get back the real thing.

|

|

But, again, this is all a lie. The children getter is overridden, and that getter’s toString method is overridden.

That’s frustrating, but we’ve already solved this problem, let’s give the naive solution a try.

|

|

That’s not going to work. The children field is a getter, not a method. Even conceptually the above doesn’t make sense: the getter is defined on Element.prototype but needs an Element instance to run against, so merely referencing it (e.g. by including it as an expression) invokes it instead of handing us a function we can store. We need an alternative. One that works well here is to use the introspection APIs to do what we were trying to express above: “grab a reference to the function that defines the behavior of the getter”.

Let’s give this a shot.

|

|

and if we try to invoke that new getter…

|

|
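A minimal sketch of both steps, grabbing the getter function and then invoking it against an instance. `FakeElement` is a hypothetical stand-in so the example runs without a DOM; in a browser the descriptor would be read from Element.prototype. `Object.getOwnPropertyDescriptor` is the standard introspection API.

```typescript
// Hypothetical stand-in for Element.
class FakeElement {
  constructor(private readonly kids: string[]) {}

  get children(): string[] {
    return this.kids;
  }
}

// Instrumentation runs first: read the accessor's descriptor and keep its `get` function.
const childrenDescriptor = Object.getOwnPropertyDescriptor(FakeElement.prototype, "children");
if (childrenDescriptor === undefined || childrenDescriptor.get === undefined) {
  throw new Error("expected `children` to be an accessor on the prototype");
}
const originalChildrenGetter: (this: FakeElement) => string[] = childrenDescriptor.get;

// Page script runs later and redefines the accessor.
Object.defineProperty(FakeElement.prototype, "children", {
  get(): string[] {
    return [];
  },
  configurable: true,
});

const row = new FakeElement(["td", "td"]);
const patchedChildren = row.children;                    // []: the override answers
const nativeChildren = originalChildrenGetter.call(row); // ["td", "td"]: the original answers
```

The key detail is `.call(row)`: the stored getter is just a function, so the instance has to be supplied explicitly as `this`.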

Success! We’re now able to invoke the original .children getter defined on Elements without LWC getting in the way.

Generalize

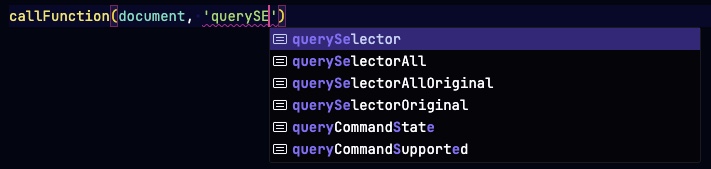

So far every API we’ve relied on has been tweaked by LWC. We need to come up with a generalized solution for both of the cases above so that we can confidently write code that will work as the browser and spec intended. One method (which is not free!) is to define a handful of functions that each handle one of “store and use the original method” and “store and use the original getter”. Those functions, when used together, should result in a few things:

- The originals are stored, consistently

- “Invoking” these originals should get the benefits of type safety at build time and via autocomplete in an IDE

- It should be completely generalized so that any method or getter could be called using the same convention

|

|
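A minimal sketch of the “store” half, assuming the `<field>Original` naming convention. `storeOriginalMethod`, `storeOriginalGetter`, `FakeElement`, and `WithOriginals` are hypothetical names for illustration; in a browser the first argument would be something like Element.prototype.

```typescript
const SUFFIX = "Original";

// Copy a method to `<name>Original` on the same prototype.
function storeOriginalMethod(proto: object, name: string): void {
  const descriptor = Object.getOwnPropertyDescriptor(proto, name);
  if (descriptor === undefined || typeof descriptor.value !== "function") {
    throw new Error(`${name} is not a method on the given prototype`);
  }
  Object.defineProperty(proto, name + SUFFIX, {
    value: descriptor.value,
    writable: true,
    configurable: true,
    enumerable: false,
  });
}

// Copy an accessor's getter to `<name>Original`, keeping it an accessor.
function storeOriginalGetter(proto: object, name: string): void {
  const descriptor = Object.getOwnPropertyDescriptor(proto, name);
  if (descriptor === undefined || descriptor.get === undefined) {
    throw new Error(`${name} is not a getter on the given prototype`);
  }
  Object.defineProperty(proto, name + SUFFIX, {
    get: descriptor.get,
    configurable: true,
    enumerable: false,
  });
}

// Hypothetical stand-in for Element.
class FakeElement {
  constructor(private readonly kids: string[]) {}
  get children(): string[] {
    return this.kids;
  }
  querySelector(selector: string): string | null {
    return this.kids.indexOf(selector) !== -1 ? selector : null;
  }
}

// Instrumentation runs first.
storeOriginalMethod(FakeElement.prototype, "querySelector");
storeOriginalGetter(FakeElement.prototype, "children");

// The page overrides both later.
FakeElement.prototype.querySelector = () => null;
Object.defineProperty(FakeElement.prototype, "children", { get: () => [] });

// The stored fields are unknown to TypeScript, so this sketch describes them by hand.
interface WithOriginals {
  querySelectorOriginal(selector: string): string | null;
  childrenOriginal: string[];
}
const row = new FakeElement(["td"]) as FakeElement & WithOriginals;
```

After this runs, `row.querySelector("td")` goes through the override while `row.querySelectorOriginal("td")` and `row.childrenOriginal` reach the stored originals.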

At this point we can call <object>.<field>Original whenever we’d like, but in isolation the two downsides are:

- We’re using TypeScript and none of these fields are defined as part of the spec, so calling document.querySelectorOriginal() will result in a TypeScript error

- It is technically possible for the page to, for whatever reason, delete or redefine these new fields

There are many techniques we could use to address the two problems above. Let’s focus on getting a working system in place that best fits into our codebase. To do that we’ll define two methods, each used to invoke either a method or a getter.

|

|
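A minimal sketch of what two such helpers could look like, assuming originals were stored under `<field>Original`. The names `callOriginal`, `getOriginal`, `MethodKeys`, and `FakeElement` are hypothetical and are not Reflect’s actual implementation.

```typescript
// Keys of T whose values are functions; this is what drives autocomplete for `name`.
type MethodKeys<T> = {
  [K in keyof T]: T[K] extends (...args: any[]) => any ? K : never;
}[keyof T];

const ORIGINAL_SUFFIX = "Original";

// Call the stored original method if present, otherwise whatever is currently defined.
function callOriginal<T extends object, K extends MethodKeys<T> & string>(
  target: T,
  name: K,
  ...args: T[K] extends (...a: infer A) => any ? A : never
): T[K] extends (...a: any[]) => infer R ? R : never {
  const bag = target as unknown as Record<string, unknown>;
  const stored = bag[name + ORIGINAL_SUFFIX];
  const fn = typeof stored === "function" ? stored : bag[name];
  return (fn as Function).apply(target, args);
}

// Read through the stored original getter if present, otherwise the current field.
function getOriginal<T extends object, K extends keyof T & string>(target: T, name: K): T[K] {
  const bag = target as unknown as Record<string, unknown>;
  const key = name + ORIGINAL_SUFFIX;
  return (key in target ? bag[key] : bag[name]) as T[K];
}

// Hypothetical stand-in for Element.
class FakeElement {
  constructor(private readonly kids: string[]) {}
  get children(): string[] {
    return this.kids;
  }
  querySelector(selector: string): string | null {
    return this.kids.indexOf(selector) !== -1 ? selector : null;
  }
}

// Instrumentation: store the originals, then let the "page" override both.
const proto = FakeElement.prototype;
Object.defineProperty(proto, "querySelectorOriginal", { value: proto.querySelector, configurable: true });
Object.defineProperty(proto, "childrenOriginal", {
  get: Object.getOwnPropertyDescriptor(proto, "children")!.get,
  configurable: true,
});
proto.querySelector = () => null;
Object.defineProperty(proto, "children", { get: () => [] });

const row = new FakeElement(["td"]);
const foundCell = callOriginal(row, "querySelector", "td"); // typed as string | null
const kidsSeen = getOriginal(row, "children");              // typed as string[]

// Fallback: nothing was stored for this object, so the current definitions are used.
const plain = { size: 3, double(n: number): number { return n * 2; } };
const doubled = callOriginal(plain, "double", 21);
const plainSize = getOriginal(plain, "size");
```

Callers only ever name the spec-defined field, so the `Original` suffix stays an internal detail and the compiler can check both the field name and the argument types.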

Most of the apparent complexity above comes from the types, which all disappear at runtime. The two functions above, at runtime, boil down to “get the original version of something if it exists, otherwise fall back to the field however it is currently defined”.

|

|

Plus we get autocomplete:

Conclusion

This system is not perfect and incurs the overhead of defining + executing a function where an inline expression should suffice. Further optimizations are not covered in this article, but the foundation described above allows us to circumvent the limitations that Salesforce imposes on LWC codebases.

LWC is an impressive project, as evidenced by the sheer magnitude of the codebase (there are a lot of details to cover when trying to convincingly fake a decade of standards!). And while I wouldn’t conclusively pass judgement without the full context, the design decisions appear to ignore the most important consumer of a framework’s design: the end user. The project focuses on the developer experience, and uses “does this feel like modern, native web programming” as the criterion by which the product is judged. This introduces headaches for consumers of the runtime (e.g. Reflect) but also adds layers of inexplicable complexity for developers that are trying to “use the platform”.

It’s unclear if or when the shimmed ShadowRoot implementation will disappear. The LWC repo mentions this plan in a number of places but there are no concrete (public) timelines as far as I can tell. Until the polyfills disappear, LWC developers will need to be careful to only use the APIs the way Salesforce intends, and external projects like Reflect will need to come up with ways to break out of the sandbox that LWC apps attempt to force you into.

Test Bench

This is the snippet that was used to compare performance of the native implementations vs their LWC equivalents:

|

|
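The general shape of such a comparison can be sketched as follows. This is an illustrative harness, not the script that produced the numbers above: `timeIt`, `slowdown`, `nativeRead`, and `shimmedRead` are hypothetical names, and the two read functions are placeholders for a stored native getter and its shimmed replacement. In a browser, performance.now() would give finer resolution than Date.now().

```typescript
// Run `fn` `iterations` times and return the elapsed wall-clock time in milliseconds.
function timeIt(fn: () => unknown, iterations: number): number {
  const start = Date.now();
  for (let i = 0; i < iterations; i++) {
    fn();
  }
  return Date.now() - start;
}

// How many times slower the candidate is than the baseline (1 means equal).
function slowdown(baselineMs: number, candidateMs: number): number {
  return candidateMs / Math.max(baselineMs, 1); // guard against a 0 ms baseline
}

// Placeholders: in a real page these would call the stored original and the current field.
const nativeRead = (): number => 1;
const shimmedRead = (): number => {
  let total = 0;
  for (let i = 0; i < 10; i++) total += i; // simulated extra work done by a shim
  return total;
};

const iterations = 100000;
const nativeMs = timeIt(nativeRead, iterations);
const shimmedMs = timeIt(shimmedRead, iterations);
const ratio = slowdown(nativeMs, shimmedMs);
```

Results from a loop like this vary between runs and machines, so they should be read as rough ratios rather than precise measurements.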