The Problem with Code-based Test Automation

Reflect is a platform for building and running automated regression tests for web applications. The appeal of automated end-to-end tests is straightforward: the alternative is manual testing, which is slow and error-prone. Automated tests increase development velocity by executing quickly and producing structured results. Historically, however, automated web tests had to be expressed in code, which meant that a programmer had to build them. More importantly, because the tests were written in code, they were tied to the exact underlying structure of the webapp. This specificity causes major frustration for the tester when the underlying structure of the application changes and the tests break, even though the application’s user-visible functionality is identical. As a result, code-based test automation is inherently flaky and not resilient.

We created Reflect to abstract over the specificity problem inherent to code-based web testing. Our initial approach moved the “interface” for creating tests away from a source code file that literally drives the browser and toward a rich structured format describing the actions of the test. Furthermore, we had the insight that if tests are represented semantically (e.g., as a text description of test steps), the tester no longer needs to be a programmer. We could automatically build those test step descriptions for the tester and eliminate the need for them to understand the HTML internals of modern webapps. Thus, Reflect was born as a no-code alternative that builds end-to-end regression tests automatically as the tester performs actions within the browser.

Reflect AI: A Purer Abstraction for Testing Intent

Reflect’s record-and-play approach ultimately still relies on translating the test definition into code-based instructions when executing test steps. To improve resiliency to underlying changes in the webapp under test, Reflect captures multiple selectors and uses visible text to locate elements. Nonetheless, a large enough change in the structure of the application can break these syntactical approaches, even though a human comparing the test plan to the live webpage would know what to click on.

To truly close this gap between syntactic approaches and semantic understanding, Reflect now uses an integration with OpenAI to bring a more human perspective to the execution of individual test steps. Specifically, Reflect AI intelligently chooses which element to interact with based on most of the factors a human would use:

- visible text

- location

- tag

- attributes

- relationship to other elements

By emulating how a human would compare the test definition to the current state of the webpage, Reflect AI allows us to select elements for interaction where CSS or XPath selectors have gone stale.

What about data-testids?

HTML attributes like data-testid are a common approach used to mitigate the brittleness of more generic CSS or XPath selectors. These custom HTML attributes are added specifically to allow code-based automated testing frameworks to identify elements when the rest of the webpage’s structure may have changed around them.
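As a minimal sketch of why such attributes help, the snippet below looks up the same link by its test attribute before and after a layout refactor. The markup, the nav-candidates value, and the find_by_test_id helper are hypothetical and exist only for illustration; the standard library’s XML parser stands in for a real browser DOM.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup: the same link before and after a layout refactor.
BEFORE = """
<nav>
  <a href="/jobs">Jobs</a>
  <a href="/candidates" data-testid="nav-candidates">Candidates</a>
</nav>
"""

AFTER = """
<div class="sidebar">
  <ul>
    <li><a href="/home">Home</a></li>
    <li><a href="/jobs">Jobs</a></li>
    <li><a href="/candidates" data-testid="nav-candidates">Candidates</a></li>
  </ul>
</div>
"""


def find_by_test_id(markup: str, test_id: str):
    """Return the first element whose data-testid equals test_id, or None."""
    root = ET.fromstring(markup.strip())
    for element in root.iter():
        if element.get("data-testid") == test_id:
            return element
    return None


# The lookup depends only on the attribute, not on nesting or position.
before_link = find_by_test_id(BEFORE, "nav-candidates")
after_link = find_by_test_id(AFTER, "nav-candidates")
```

Both lookups return the “Candidates” link even though its parent elements and position changed, which is the property that makes test attributes attractive when developers are able to maintain them.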

However, data-testid and similar attributes have limitations for creating a general purpose web regression testing platform. First, manual testers often cannot modify the codebase. This means that testers are at the mercy of developers to implement and maintain these attributes. This tight coupling between the developer and the tester can be difficult to achieve, and if it is successful, it increases the interdependencies between the two functions and ultimately increases the cost.

A second reason that data-testid attributes are not a silver bullet is that many large enterprise application frameworks, such as Salesforce, SAP and ServiceNow, do not expose custom attributes in a standardized or coordinated way. This means large enterprise web applications can’t enforce code-based rules to aid in their automation. Thus, attribute-based approaches carry an additional coordination cost at best, and can’t even be relied upon or implemented at worst.

In comparison, using semantically-aware AI to capture and then later perform test steps allows Reflect to operate at the same level of abstraction as an end-user, which, by definition, is the correct abstraction level for testing end-to-end user experiences.

Selectors are a cache

The good news is that Reflect allows any developer who’s already using data-testid and similar attributes to benefit from their effort to carefully annotate their application. This is because selectors (including those using test attributes like the above) are still useful as a shortcut to the AI.

Querying the AI at run time incurs two costs: latency and computation. It takes time to capture the state of the current webpage, and it takes money (and time) to have the AI compute the selected element. As a result, if the selectors are still accurate, then we can use them as a sort of “cache” to save time and computation. Reflect optimistically uses selectors when it can (and favors those using data-testid and the like), and only needs to engage the AI when the syntactic approaches have become stale. This offers speed whenever the selectors are still valid, while preserving accuracy when they are not.
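The “selectors as a cache” idea can be sketched roughly as follows. This is an illustration under stated assumptions, not Reflect’s actual implementation: the page is modeled as a plain dictionary from selector to matching elements, ai_locate is a hypothetical callback standing in for the expensive AI query, and sorting test-attribute selectors first is just one way the preference for data-testid might be expressed.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Stand-ins for illustration: an "element" is just a string, and a "page"
# maps each selector to the elements it currently matches.
Element = str
Page = Dict[str, List[Element]]


def prioritize(selectors: Sequence[str]) -> List[str]:
    """Put selectors that use a test attribute first, keeping the rest in order."""
    return sorted(selectors, key=lambda s: "data-testid" not in s)


def locate(
    page: Page,
    selectors: Sequence[str],
    ai_locate: Callable[[], Optional[Element]],
) -> Tuple[Optional[Element], str]:
    """Try the cheap selectors first; call the costly AI lookup only on a miss."""
    for selector in selectors:
        matches = page.get(selector, [])
        if len(matches) == 1:  # accept only an unambiguous match
            return matches[0], "selector"
    return ai_locate(), "ai"
```

With a page where a recorded selector still matches exactly one element, locate returns that element without ever invoking ai_locate; with a page where every selector has gone stale, it falls through to the callback. Requiring exactly one match is a deliberate choice in this sketch, since an ambiguous selector is treated the same as a stale one.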

The Evolution of Automated Web Testing

To demonstrate the evolution of automated web testing, we’ve pulled an example of targeting an element using the different approaches described above. The application under test is a recruiting application that helps healthcare facility operators attract and hire talent.

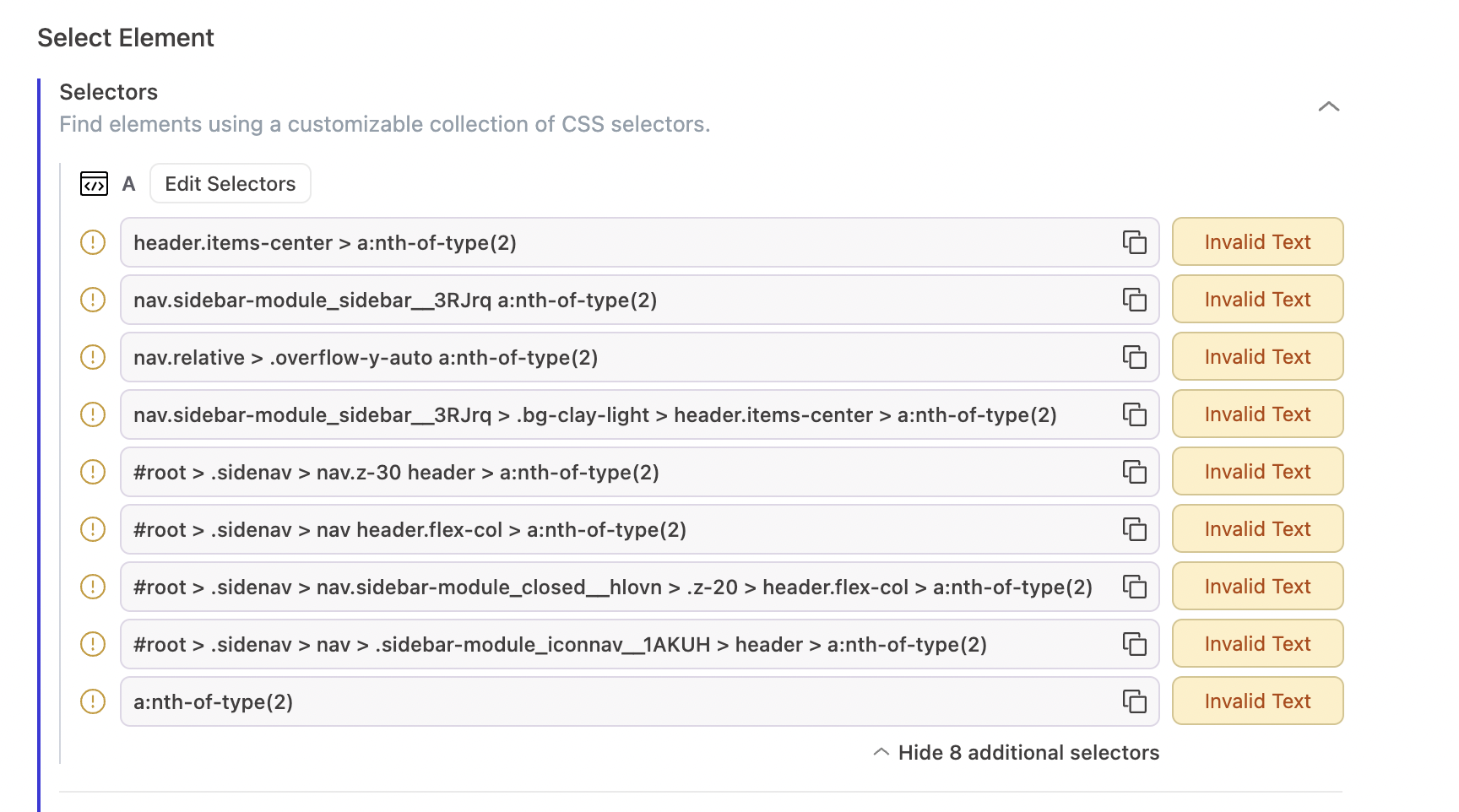

Selector-based targeting

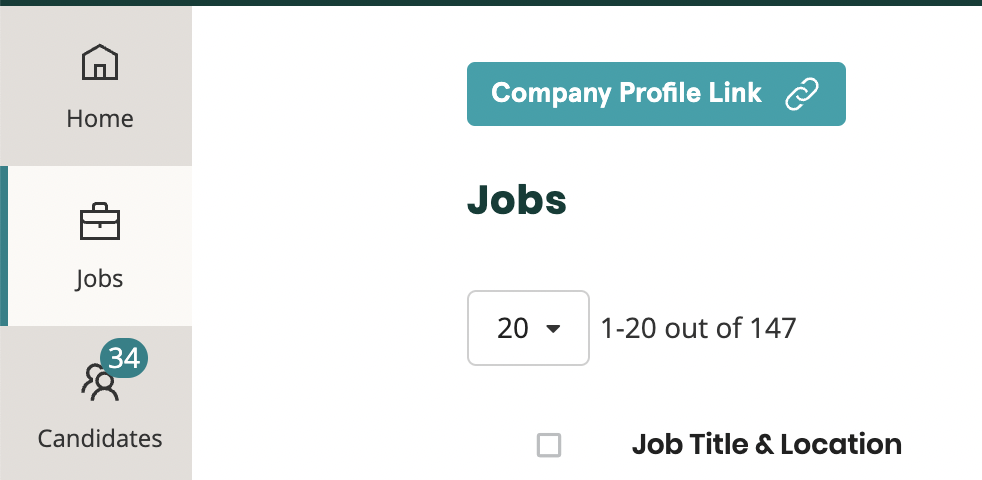

Initially, the application’s menu navigation bar on the left side of the viewport lists “Candidates” as the second option. A test engineer won’t be able to modify the source code directly to add a data-testid attribute, so they’ll likely rely on the shortest valid selector to target this menu item. In this case, it’s a:nth-of-type(2), since the “Candidates” link is the second one on the page.

A single selector is obviously susceptible to becoming stale as the menu navigation changes. This is why Reflect defaults to capturing many diverse selectors, such as the following:

With a number of valid selectors, there is a good chance that one of them will locate the “Candidates” link at run time.

Text-based targeting

Unfortunately, our selectors all suffer from the specificity problem alluded to earlier. They all require that the selected element is the second link in the menu navigation bar (i.e., nth-of-type(2)). This is problematic when the “Candidates” link moves to the third position in the navigation bar:

As a result of the change, all of our selectors have gone stale, assuming we’re requiring the element to have the “Candidates” text:

This situation is why Reflect supports text-based fallback, wherein it locates elements using their visible text. This is resilient to selectors going stale as long as the visible text hasn’t changed. And in this example, Reflect successfully locates the “Candidates” link in the third position.
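The difference between positional and text-based targeting can be sketched in a few lines. The menus below are hypothetical (only the “Candidates” label and its position come from the example above), and nth_link is a rough stand-in for a:nth-of-type(n) on a flat menu rather than a full CSS selector engine.

```python
import xml.etree.ElementTree as ET

# Hypothetical menus; only "Candidates" and its position come from the article.
MENU_AT_RECORDING = "<nav><a>Dashboard</a><a>Candidates</a><a>Jobs</a></nav>"
MENU_AFTER_CHANGE = "<nav><a>Dashboard</a><a>Jobs</a><a>Candidates</a></nav>"


def nth_link(markup: str, n: int):
    """Rough stand-in for `a:nth-of-type(n)` (1-based) on a flat menu."""
    links = list(ET.fromstring(markup).iter("a"))
    return links[n - 1] if 0 < n <= len(links) else None


def link_by_text(markup: str, text: str):
    """Return the first link whose visible text equals `text`, or None."""
    for link in ET.fromstring(markup).iter("a"):
        if (link.text or "").strip() == text:
            return link
    return None


# The positional lookup silently picks the wrong link after the reorder...
stale_hit = nth_link(MENU_AFTER_CHANGE, 2)
# ...while the text lookup still finds the intended one.
text_hit = link_by_text(MENU_AFTER_CHANGE, "Candidates")
```

Note that the positional selector does not fail outright after the reorder: it still matches an element, just not the intended one, which is why the expected text has to be checked before a selector match is trusted.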

AI-based targeting

Unfortunately, the visible text of the “Candidates” link includes a dynamic value: the number of active candidates. At recording time this value was 1, but it will change over the lifetime of the test.

While Reflect supports excluding dynamic portions of an element’s expected text, this still requires knowing that the text is dynamic and configuring Reflect to ignore it.
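To make that configuration burden concrete, here is a minimal sketch of ignoring a dynamic counter when comparing text. The label format (“Candidates 1”) and the rule of stripping every run of digits are assumptions chosen for illustration; they are not a description of how Reflect is configured.

```python
import re


def normalize(text: str) -> str:
    """Drop digit runs and collapse whitespace so counters don't affect matching."""
    without_digits = re.sub(r"\d+", "", text)
    return " ".join(without_digits.split())


def text_matches(expected: str, actual: str) -> bool:
    """Compare two labels while ignoring any numeric portion."""
    return normalize(expected) == normalize(actual)


# Recorded with one active candidate, replayed with twenty-seven.
same_link = text_matches("Candidates 1", "Candidates 27")
other_link = text_matches("Candidates 1", "Jobs 1")
```

The rule only works because someone knew in advance that the number was dynamic, and a blanket digit-stripping rule like this one would also treat labels such as “Step 1” and “Step 2” as identical.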

To avoid this burden, Reflect AI supports automatically locating elements based solely on a plain text description. Reflect has always generated a simple description for each step in a test, such as “Click on the ‘Login’ button”. But now, Reflect uses this description as a fallback for finding an element when the selectors fail. Furthermore, the step description is editable by the user so they can enhance and enrich it if they want.

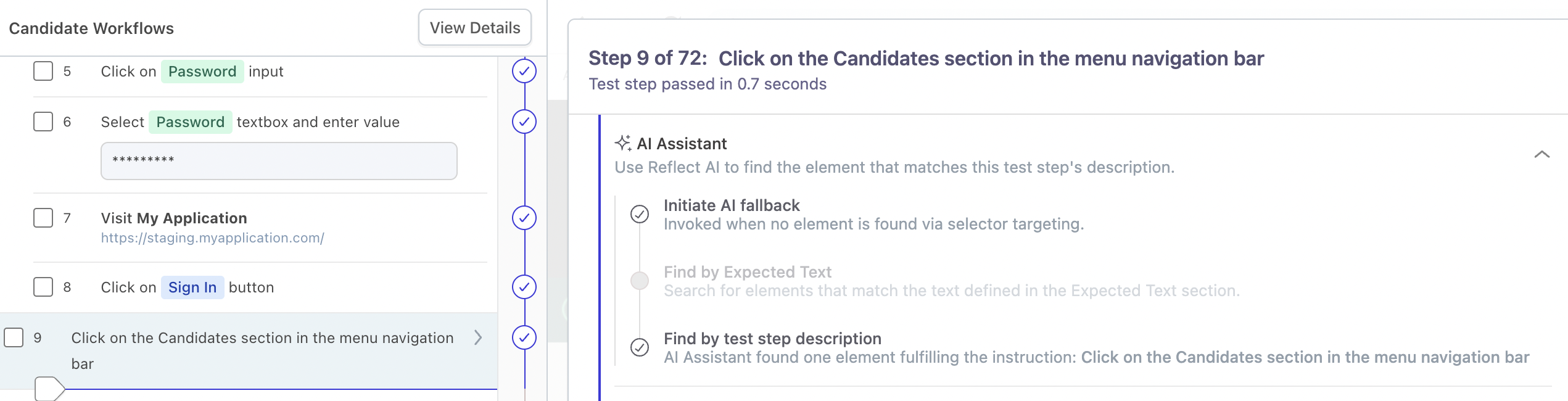

This means users can describe their action in semantic, rather than literal, terms and let Reflect AI make a decision at run time. In this case, using the description “Click on the Candidates section in the menu navigation bar” results in Reflect successfully locating the element and clicking on it:

Forward into the Future

This article describes the high-level evolution of automated web testing from code-based selectors to Reflect AI today. We believe these AI-assisted features are crucial for bridging the gap in understanding between overly-specific code and an expert manual tester. By combining syntactical and AI-based approaches, Reflect offers the speed of code execution with the accuracy of a manual tester. Reflect’s rich expression of intent ultimately improves the efficiency and effectiveness of web application testing.
